In short, SetMaxHeap provides a way to run GC (garbage collection) with a fixed trigger threshold.

Official source: https://go-review.googlesource.com/c/go/+/227767/3

What problems does it solve?

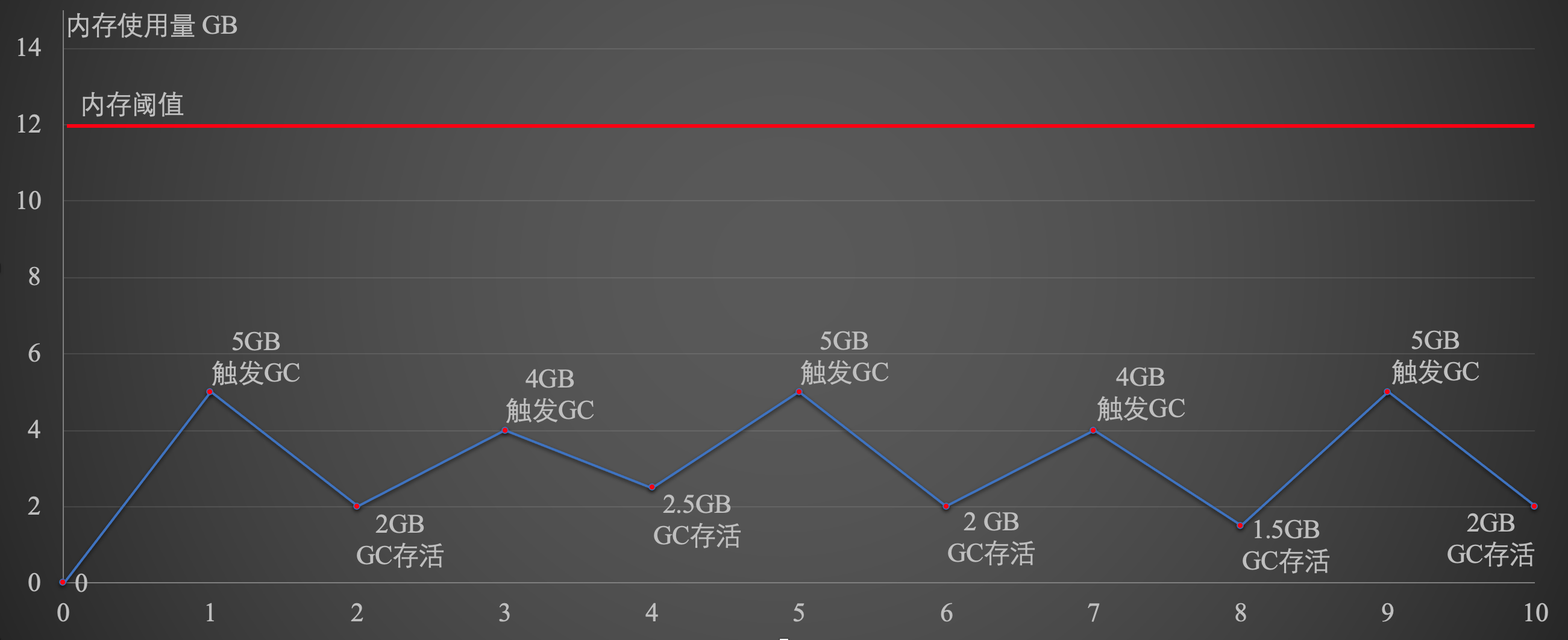

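It targets workloads where a large number of short-lived allocations trigger GC too often even though few objects survive each cycle,

or where the machine has plenty of spare memory and you want to use it to lower the GC frequency.

Why lower the GC frequency?

GC involves STW (stop-the-world) pauses. In a latency-sensitive service, if two GC cycles run back to back within a short window, both the STW pauses and the CPU consumed by GC have a significant impact. SetMaxHeap is not necessarily a perfect answer: it makes trade-offs in some scenarios, the Go team is still experimenting with it, and the change has not been merged into a release.

First, look at what can be done for the scenario above without SetMaxHeap.

A quick word on what the GC numbers mean. Running with GODEBUG=gctrace=1 prints data like this: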

gc 16 @1.106s 3%: 0.010+19+0.038 ms clock, 0.21+0.29/95/266+0.76 ms cpu, 128->132->67 MB, 135 MB goal, 20 P

gc 17 @1.236s 3%: 0.010+20+0.040 ms clock, 0.21+0.37/100/267+0.81 ms cpu, 129->132->67 MB, 135 MB goal, 20 P

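Here we only care about 128->132->67 MB and 135 MB goal.

In order, these are: heap in use when GC starts -> heap in use when GC marking finishes -> live heap when GC marking finishes, followed by the target heap size at the end of marking for this cycle (determined when the previous cycle finished).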
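To make the four numbers easier to track across many trace lines, here is a minimal sketch that pulls them out of a gctrace line. The type heapSizes and the function parseHeapSizes are names invented for this illustration, and the regular expression assumes the line layout shown above.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// heapSizes holds the four heap figures from one gctrace line, in MB.
// The type and field names are illustrative, not part of the runtime.
type heapSizes struct {
	AtStart, AtEnd, Live, Goal int
}

// heapRe matches the "A->B->C MB, D MB goal" part of a gctrace line.
var heapRe = regexp.MustCompile(`(\d+)->(\d+)->(\d+) MB, (\d+) MB goal`)

// parseHeapSizes extracts the heap figures; ok is false if the line
// does not contain them in the expected layout.
func parseHeapSizes(line string) (hs heapSizes, ok bool) {
	m := heapRe.FindStringSubmatch(line)
	if m == nil {
		return heapSizes{}, false
	}
	var v [4]int
	for i := range v {
		n, err := strconv.Atoi(m[i+1])
		if err != nil {
			return heapSizes{}, false
		}
		v[i] = n
	}
	return heapSizes{AtStart: v[0], AtEnd: v[1], Live: v[2], Goal: v[3]}, true
}

func main() {
	line := "gc 16 @1.106s 3%: 0.010+19+0.038 ms clock, 0.21+0.29/95/266+0.76 ms cpu, 128->132->67 MB, 135 MB goal, 20 P"
	hs, ok := parseHeapSizes(line)
	fmt.Println(hs, ok)
}
```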

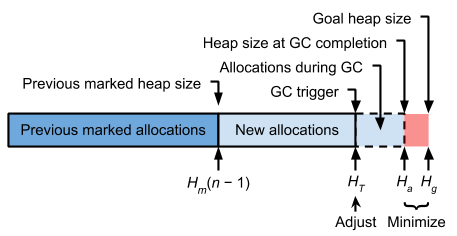

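A figure from the GC pacer design document illustrates this.

The values map to the figure as follows:

- Heap in use when GC starts: GC trigger
- Heap in use when GC marking finishes: Heap size at GC completion
- Live heap when GC marking finishes: the Previous marked heap size in the figure is this value for the previous cycle
- Target heap size at the end of marking for this cycle: Goal heap size

A brief look at GC pacing (the credit mechanism)

Note: this is simplified based on my own understanding; corrections are welcome.

GC pacing has two goals:

- Minimize |Ha-Hg|, the gap between the actual heap size when GC completes (Ha) and the goal heap size (Hg)
- Keep GC CPU usage at about 25% of the total

So when a GC cycle finishes, how can these two goals be met using only the amount of memory that survived the cycle?

The pacer is really predicting the "future" from current data and state, so some error is inevitable.

First, determine the GC goal: goal = memstats.heap_marked + memstats.heap_marked*uint64(gcpercent)/100

heap_marked is the amount of memory that survived this GC cycle. gcpercent defaults to 100 and can be set with the environment variable GOGC=100 or with debug.SetGCPercent(100).

So by default goal = 2 * heap_marked.

gc_trigger is derived from goal (roughly 90% of goal). Each time GC marking finishes, the gap between gc_trigger and goal is adjusted dynamically based on |Ha-Hg| and the CPU actually used.

The gap between goal and gc_trigger is the space reserved for objects allocated while GC is running.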
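The relationship between goal, gc_trigger, and the reserved headroom can be sketched with a few lines of arithmetic. This is a simplified model, not the runtime's pacer: heapGoal and heapTrigger are names made up for this example, the trigger is taken as heap_marked*(1+triggerRatio), and the ratio 0.875 is only the initial value the runtime starts with, so real cycles will differ.

```go
package main

import "fmt"

// heapGoal applies goal = heap_marked + heap_marked*gcpercent/100.
// A negative gcpercent (GC off) is modelled as the maximum uint64.
func heapGoal(heapMarked uint64, gcpercent int) uint64 {
	if gcpercent < 0 {
		return ^uint64(0)
	}
	return heapMarked + heapMarked*uint64(gcpercent)/100
}

// heapTrigger places the trigger at heap_marked*(1+triggerRatio).
// The runtime applies further bounds that this sketch leaves out.
func heapTrigger(heapMarked uint64, triggerRatio float64) uint64 {
	return uint64(float64(heapMarked) * (1 + triggerRatio))
}

func main() {
	const MB = 1 << 20
	marked := uint64(67 * MB) // live heap from the trace above
	goal := heapGoal(marked, 100)
	trigger := heapTrigger(marked, 0.875)
	// The headroom is what remains for allocations made during GC.
	fmt.Printf("goal=%d MB trigger=%.1f MB headroom=%.1f MB\n",
		goal/MB, float64(trigger)/MB, float64(goal-trigger)/MB)
}
```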

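GC pacing also estimates the total amount of objects that will need scanning in the next cycle, converts that into the work the next cycle requires, and from that computes the mark assist ratio.

Between the GC trigger (gc_trigger) and the goal of the same cycle, the GC mark phase should finish if at all possible. So when a goroutine allocates a lot of memory during GC, it is pulled into mark assist work: it must do marking to earn enough credit before it is allowed to allocate an object of that size.

The sweep assist ratio is computed from the memory that survived this cycle (heap_marked) and the threshold that triggers the next cycle (gc_trigger). Between the end of this cycle and the next trigger (gc_trigger), sweeping should finish just in time.

The work for the next cycle is estimated as follows: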

// heap_scan is the number of bytes of "scannable" heap. This

// is the live heap (as counted by heap_live), but omitting

// no-scan objects and no-scan tails of objects.

//

// Whenever this is updated, call gcController.revise().

heap_scan uint64

// Compute the expected scan work remaining.

//

// This is estimated based on the expected

// steady-state scannable heap. For example, with

// GOGC=100, only half of the scannable heap is

// expected to be live, so that's what we target.

//

// (This is a float calculation to avoid overflowing on

// 100*heap_scan.)

scanWorkExpected := int64(float64(memstats.heap_scan) * 100 / float64(100+gcpercent))
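As a worked example of the expression above, the following sketch evaluates it for a hypothetical 200 MB scannable heap under a few GOGC values. The standalone function scanWorkExpected is a copy of the quoted expression made for illustration; the heap size is an assumed input.

```go
package main

import "fmt"

// scanWorkExpected mirrors the quoted runtime expression: the share of
// the scannable heap that is expected to be live in steady state.
func scanWorkExpected(heapScan uint64, gcpercent int32) int64 {
	return int64(float64(heapScan) * 100 / float64(100+gcpercent))
}

func main() {
	const MB = 1 << 20
	for _, pct := range []int32{50, 100, 400} {
		// A larger GOGC means a smaller share of the heap is expected to be live.
		fmt.Printf("GOGC=%d: expect to scan about %d of 200 MB\n",
			pct, scanWorkExpected(200*MB, pct)/MB)
	}
}
```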

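How to solve the problem

Back to the problem from the start of the article: how do we make full use of spare memory and reduce both the GC frequency and the CPU that GC consumes?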

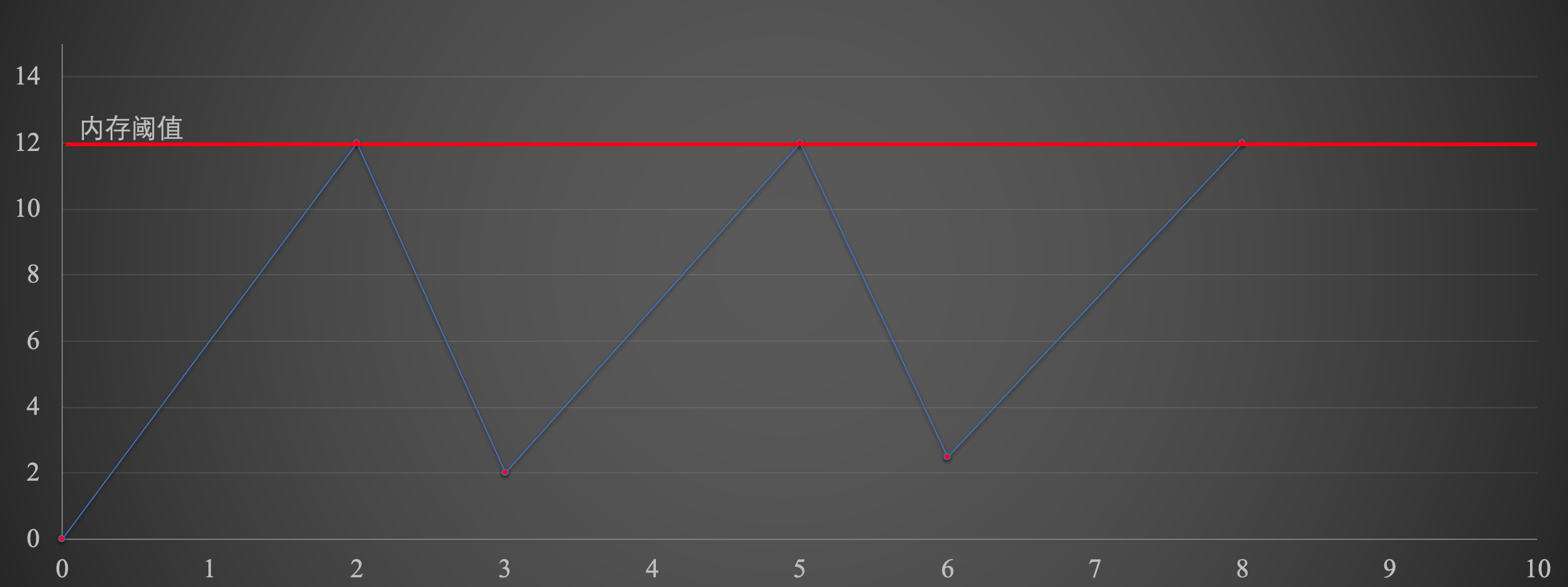

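Increase gcpercent?

As the figure above shows, about 2GB of objects survive GC. With gcpercent set to 400, the next GC trigger threshold rises to about 10GB.

The first cycle looks fine, with the trigger threshold raised to 10GB. But if the live heap reaches 2.5GB after some cycle, the next threshold becomes about 12.5GB, which exceeds the memory limit, causes an OOM (out of memory), and crashes the program.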
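The arithmetic behind this failure mode can be checked with a short sketch. The 12 GiB budget is an assumption chosen to match the limit used later in the article, and nextGoal and exceeds are illustrative helpers, not runtime functions.

```go
package main

import "fmt"

const GiB uint64 = 1 << 30

// nextGoal applies goal = live + live*gcpercent/100 for gcpercent >= 0.
func nextGoal(live, gcpercent uint64) uint64 {
	return live + live*gcpercent/100
}

// exceeds reports whether the next goal would pass the memory budget.
func exceeds(live, gcpercent, budget uint64) bool {
	return nextGoal(live, gcpercent) > budget
}

func main() {
	budget := 12 * GiB // hypothetical memory budget
	for _, live := range []uint64{2 * GiB, 5 * GiB / 2} {
		goal := nextGoal(live, 400)
		fmt.Printf("live=%.1f GiB goal=%.1f GiB over budget=%v\n",
			float64(live)/float64(GiB), float64(goal)/float64(GiB),
			exceeds(live, 400, budget))
	}
}
```

A small rise in the live heap is multiplied by (1 + gcpercent/100), which is why a large gcpercent leaves so little safety margin.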

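Turn GC off, monitor heap usage, and run GC manually?

GC can be turned off with GOGC=off or debug.SetGCPercent(-1).

Heap usage can be monitored from outside the process, notifying the program with a signal, or from inside it with ReadMemStats or by using linkname to reach runtime.heapRetained.

GC can be run manually by calling runtime.GC() or debug.FreeOSMemory().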
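A minimal sketch of this approach, using only standard library calls, might look like the following. The helper names shouldCollect and collectIfNeeded and the threshold are assumptions for illustration. Note that runtime.ReadMemStats itself briefly stops the world, so it should not be polled too often, and that the article goes on to explain why forcing GC while it is turned off interacts badly with pacing.

```go
package main

import (
	"fmt"
	"runtime"
	"runtime/debug"
)

// shouldCollect reports whether the heap in use has reached the threshold.
func shouldCollect(heapAlloc, threshold uint64) bool {
	return heapAlloc >= threshold
}

// collectIfNeeded samples the heap and forces a GC when the heap in use
// is at or above threshold. It reports whether a GC was forced.
func collectIfNeeded(threshold uint64) bool {
	var ms runtime.MemStats
	runtime.ReadMemStats(&ms)
	if !shouldCollect(ms.HeapAlloc, threshold) {
		return false
	}
	runtime.GC()
	return true
}

func main() {
	// Turn off the proportional trigger and restore it on exit.
	old := debug.SetGCPercent(-1)
	defer debug.SetGCPercent(old)

	// A threshold of 0 always forces a collection; a real monitor would
	// call collectIfNeeded periodically with a tuned threshold.
	fmt.Println("collected:", collectIfNeeded(0))
}
```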

A few more things need explaining to show what is wrong with this approach.

How do GOGC=off and debug.SetGCPercent(-1) turn GC off?

gc 4 @1.006s 0%: 0.033+5.6+0.024 ms clock, 0.27+4.4/11/25+0.19 ms cpu, 428->428->16 MB, 17592186044415 MB goal, 8 P (forced)

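The GC trace shows that the goal described above has become a very odd value: 17592186044415.

What actually happens is that, once GC is turned off, Go sets goal to the huge value ^uint64(0), so the GC trigger threshold also becomes huge. That seems reasonable: raise the threshold and GC should never trigger again.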

^uint64(0)>>20 == 17592186044415 MB
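The identity above is easy to confirm: shifting the maximum uint64 right by 20 bits converts bytes to MB and yields 2^44 - 1. The function name disabledGoalMB is made up for this check.

```go
package main

import "fmt"

// disabledGoalMB converts a goal of ^uint64(0) bytes to MB by shifting
// right 20 bits, which matches the value seen in the trace.
func disabledGoalMB() uint64 {
	return ^uint64(0) >> 20
}

func main() {
	fmt.Println(disabledGoalMB()) // 17592186044415
}
```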

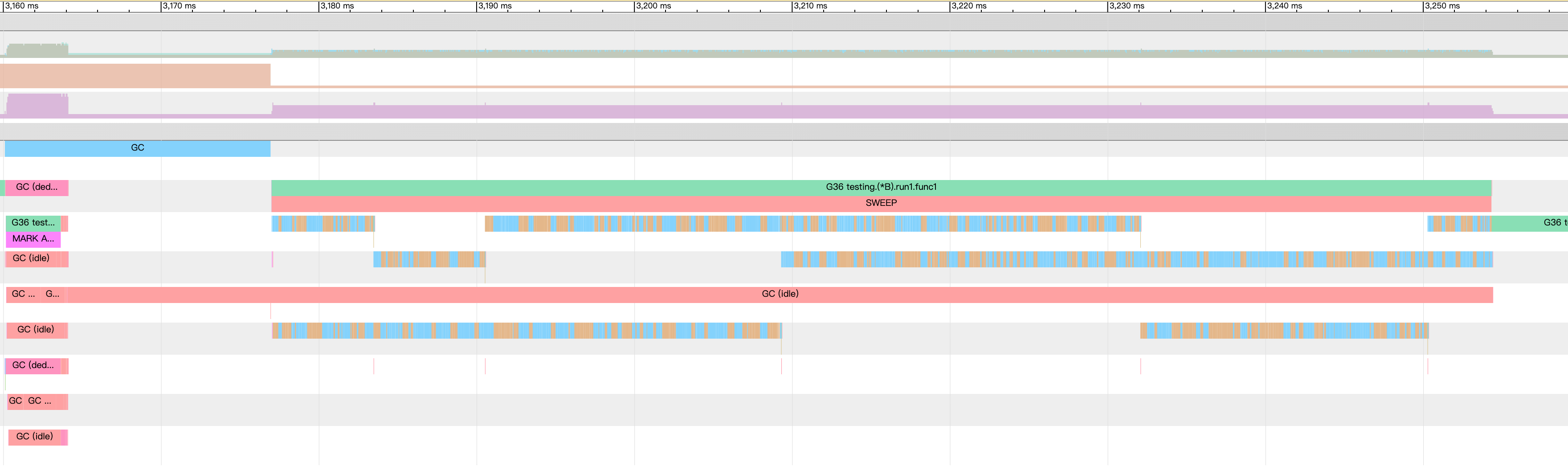

So what happens if runtime.GC() is called manually while GC is turned off?

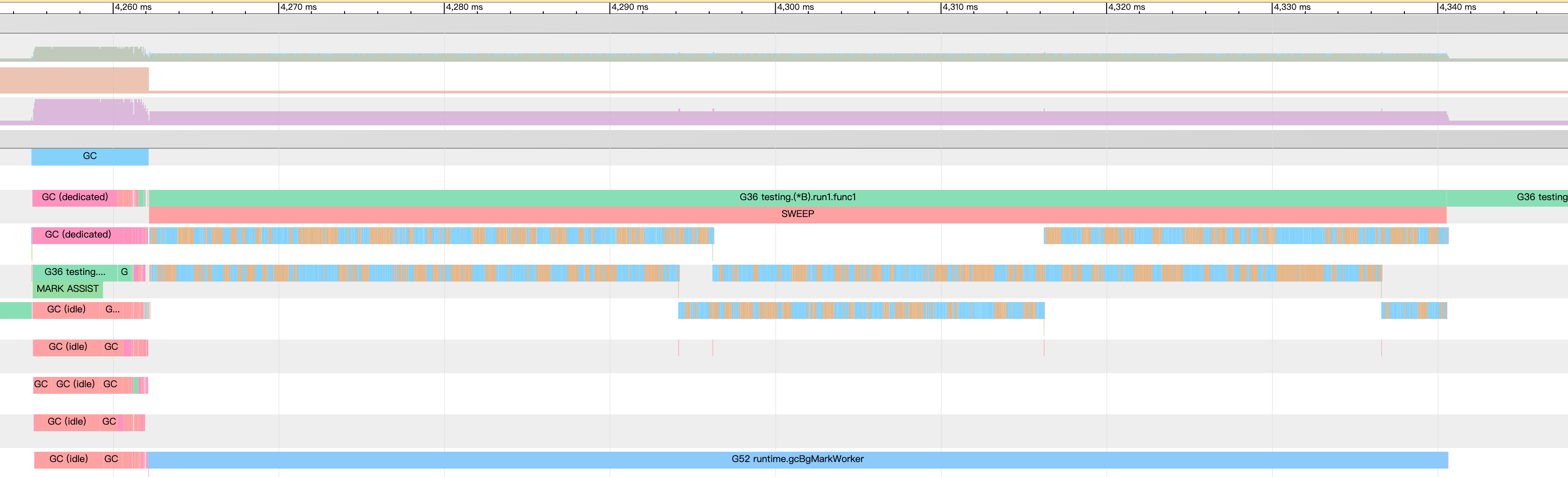

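Because goal and gc_trigger are set to huge values while mark assist and sweep assist are still computed from these bogus values, the assist workload grows. The trace confirms this.

The trace looks very odd. Without digging further: this approach is incompatible with the GC pacing credit mechanism.

Remember: do not trigger GC manually while GC is turned off. This problem still exists at least in the current Go 1.14.

The star of this article: SetMaxHeap

In short, SetMaxHeap works by forcibly controlling the value of goal.

Note: SetMaxHeap is essentially a soft limit and cannot prevent OOM in extreme cases. It can be combined with memory monitoring and debug.FreeOSMemory().

SetMaxHeap controls the heap size only. Go also allocates the following memory outside the heap, so in practice you need to leave some headroom between the limit and the actual physical memory limit.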

stacks_sys uint64 // only counts newosproc0 stack in mstats; differs from MemStats.StackSys

mspan_sys uint64

mcache_sys uint64

buckhash_sys uint64 // profiling bucket hash table

gc_sys uint64 // updated atomically or during STW

other_sys uint64 // updated atomically or during STW

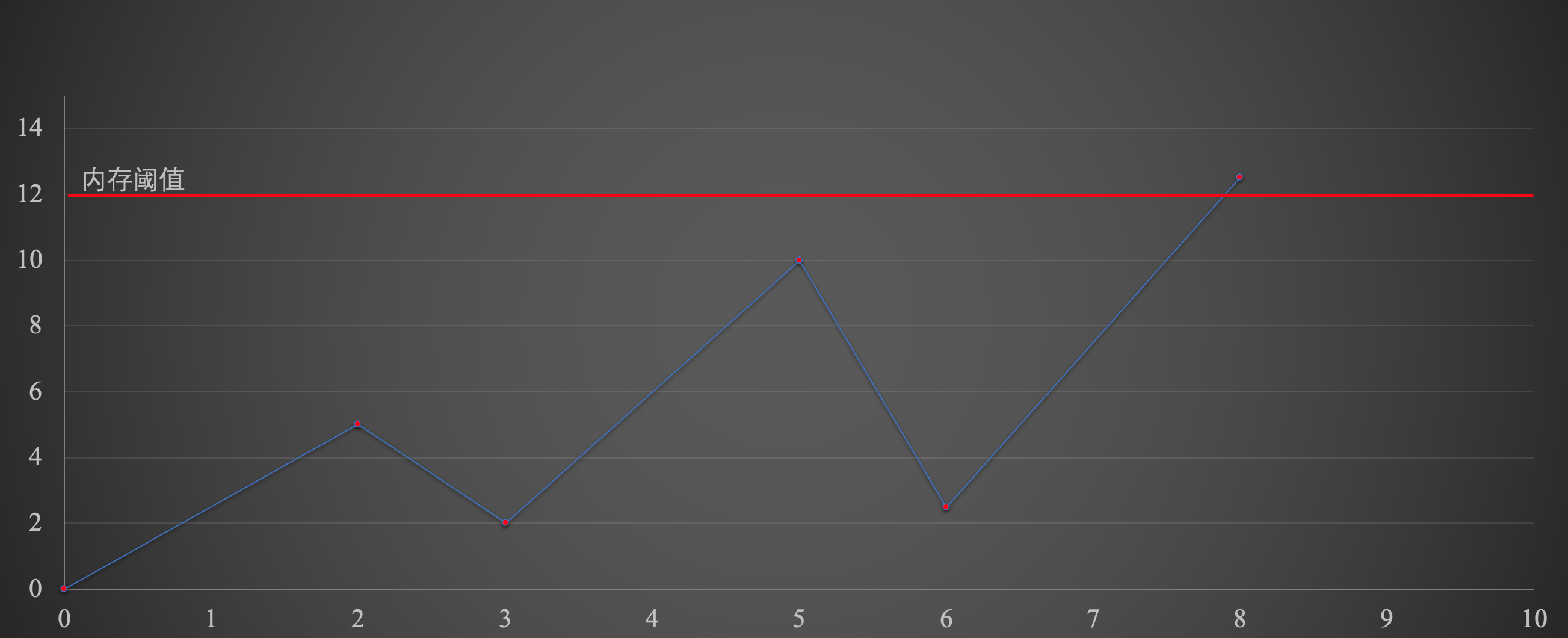

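For the problem described at the start of the article, the GC behaviour expected with SetMaxHeap looks roughly like this.

Simple usage 1

notify := make(chan struct{}, 1) // ignore notify for now

debug.SetMaxHeap(12<<30, notify) // set the limit to 12GB

debug.SetGCPercent(-1) // the implementation handles the GC-off case, so this combination is allowed

This approach is blunt: it pins goal to a fixed value.

Note: as explained above, what actually triggers GC is gc_trigger, so with a 12GB limit GC starts slightly earlier. For simplicity this article treats gc_trigger as approximately equal to goal.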

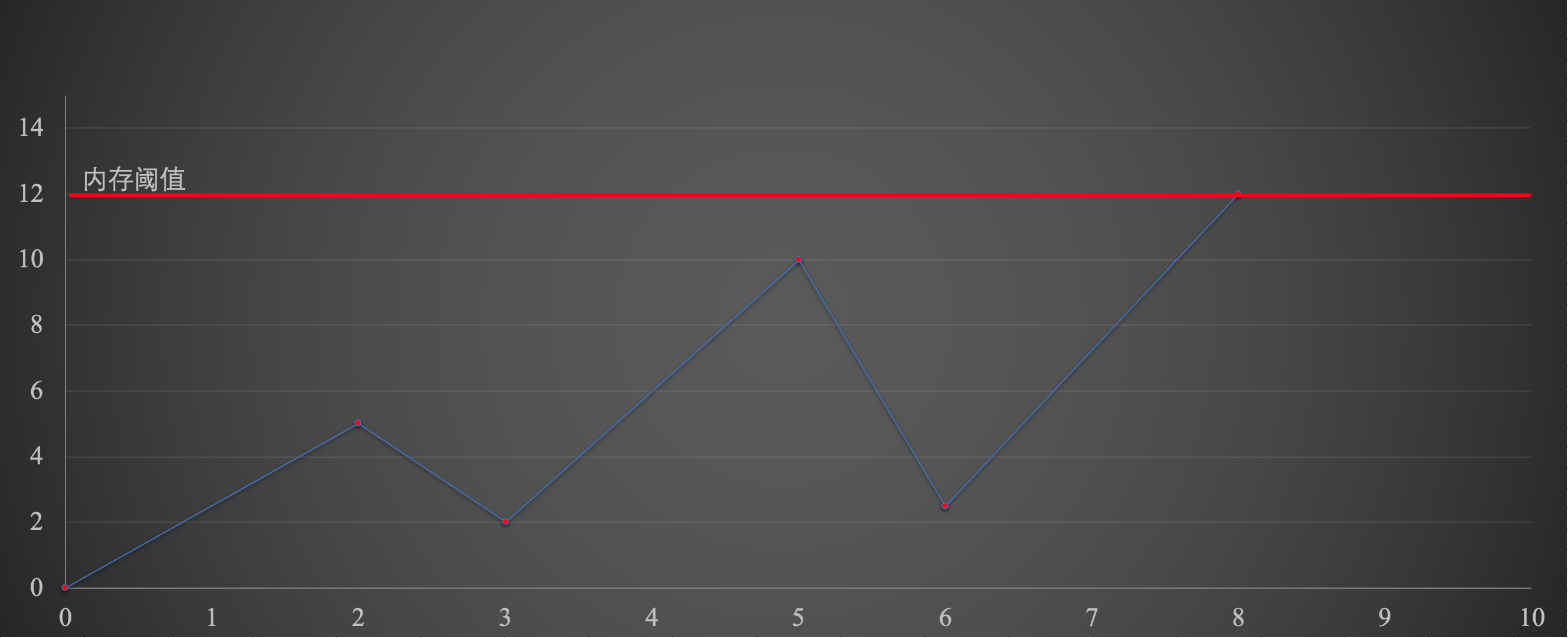

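Simple usage 2

notify := make(chan struct{}, 1) // ignore notify for now

debug.SetMaxHeap(12<<30, notify) // set the limit to 12GB

When GC is left on, SetMaxHeap works as follows: goal is still computed from gcpercent. If goal is below the SetMaxHeap limit, nothing changes; if goal is above the limit, goal is capped at the limit.

Note: as explained above, what actually triggers GC is gc_trigger, so with a 12GB limit GC starts slightly earlier. For simplicity this article treats gc_trigger as approximately equal to goal.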
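Both usages come down to one capping rule, which can be modelled outside the runtime. The sketch below follows the goal logic of the CL's gcSetTriggerRatio as quoted later in this article, including the floor that keeps the effective GOGC from dropping below 10; cappedGoal and noLimit are names invented here, and the trigger adjustments are left out.

```go
package main

import "fmt"

const (
	GiB     uint64 = 1 << 30
	noLimit        = ^uint64(0) // stands in for "no max heap set"
)

// cappedGoal computes the heap goal from gcpercent, then caps it at
// maxHeap. The cap is soft: the goal never drops below the live heap
// plus 10% (or plus gcpercent, if the user asked for less than 10).
func cappedGoal(heapMarked uint64, gcpercent int, maxHeap uint64) uint64 {
	goal := noLimit
	if gcpercent >= 0 {
		goal = heapMarked + heapMarked*uint64(gcpercent)/100
	}
	if maxHeap != noLimit && goal > maxHeap {
		goal = maxHeap
		minGOGC := uint64(10)
		if gcpercent >= 0 && uint64(gcpercent) < minGOGC {
			minGOGC = uint64(gcpercent)
		}
		if lower := heapMarked + heapMarked*minGOGC/100; goal < lower {
			goal = lower
		}
	}
	return goal
}

func main() {
	limit := 12 * GiB
	fmt.Println(cappedGoal(2*GiB, 100, limit)/GiB)  // usage 2, goal below the limit
	fmt.Println(cappedGoal(8*GiB, 100, limit)/GiB)  // usage 2, goal capped at the limit
	fmt.Println(cappedGoal(2*GiB, -1, limit)/GiB)   // usage 1, goal pinned to the limit
	fmt.Println(cappedGoal(12*GiB, 100, limit)/GiB) // live heap at the limit: goal exceeds it
}
```

The last call shows why the limit is soft: once the live heap itself reaches the limit, the goal is allowed to rise above it.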

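Getting the code

Switch to the go1.14 branch; I used git checkout go1.14.5.

Use the cherry-pick command provided by the Go project (a proxy may be needed to reach the server; few files change, and the specific changes are listed below).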

git fetch "https://go.googlesource.com/go" refs/changes/67/227767/3 && git cherry-pick FETCH_HEAD

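The Go toolchain then has to be rebuilt from source.

How it works

Function signature

func SetMaxHeap(bytes uintptr, notify chan<- struct{}) uintptr

The bytes argument is the limit to set.

Put simply, notify is a channel that receives a notification whenever the GC policy changes. The source below shows exactly what "policy" covers.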

Whenever the garbage collector's scheduling policy changes as a result of this heap limit (that is, the result that would be returned by ReadGCPolicy changes), the garbage collector will send to the notify channel.

The return value is the MaxHeap value that was in effect before this call.

Officially recommended usage

notify := make(chan struct{}, 1)

var gcp GCPolicy

prev := SetMaxHeap(limit, notify)

// Check that that notified us of heap pressure.

select {

case <-notify:

ReadGCPolicy(&gcp) // read the GC policy after the change

default:

t.Errorf("missing GC pressure notification")

}
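The notify channel is created with a buffer of one because the runtime side sends without blocking. The behaviour of such a send can be tried in isolation; trySend is an illustrative helper, not part of the CL.

```go
package main

import "fmt"

// trySend performs a non-blocking send and reports whether the value
// was accepted. If nobody is ready to receive and the buffer is full,
// the notification is simply dropped.
func trySend(ch chan<- struct{}) bool {
	select {
	case ch <- struct{}{}:
		return true
	default:
		return false
	}
}

func main() {
	notify := make(chan struct{}, 1)
	fmt.Println(trySend(notify)) // true: the buffer slot is free
	fmt.Println(trySend(notify)) // false: one notification is already pending
	<-notify
	fmt.Println(trySend(notify)) // true: the slot is free again
}
```

With a one-element buffer, several policy changes that happen before the receiver wakes up collapse into a single pending notification, which is enough because the receiver reads the current policy rather than a payload. With an unbuffered channel, a notification sent while no receiver is waiting would be lost.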

$GOROOT/src/runtime/debug/garbage.go

// SetGCPercent sets the garbage collection target percentage:

// a collection is triggered when the ratio of freshly allocated data

// to live data remaining after the previous collection reaches this percentage.

// SetGCPercent returns the previous setting.

// The initial setting is the value of the GOGC environment variable

// at startup, or 100 if the variable is not set.

// A negative percentage disables triggering garbage collection

// based on the ratio of fresh allocation to previously live heap.

// However, GC can still be explicitly triggered by runtime.GC and

// similar functions, or by the maximum heap size set by SetMaxHeap.

func SetGCPercent(percent int) int {

return int(setGCPercent(int32(percent)))

}

// GCPolicy reports the garbage collector's policy for controlling the

// heap size and scheduling garbage collection work.

type GCPolicy struct {

// GCPercent is the current value of GOGC, as set by the GOGC

// environment variable or SetGCPercent.

//

// If triggering GC by relative heap growth is disabled, this

// will be -1.

GCPercent int

// MaxHeapBytes is the current soft heap limit set by

// SetMaxHeap, in bytes.

//

// If there is no heap limit set, this will be ^uintptr(0).

MaxHeapBytes uintptr

// AvailGCPercent is the heap space available for allocation

// before the next GC, as a percent of the heap used at the

// end of the previous garbage collection. It measures memory

// pressure and how hard the garbage collector must work to

// achieve the heap size goals set by GCPercent and

// MaxHeapBytes.

//

// For example, if AvailGCPercent is 100, then at the end of

// the previous garbage collection, the space available for

// allocation before the next GC was the same as the space

// used. If AvailGCPercent is 20, then the space available is

// only a 20% of the space used.

//

// AvailGCPercent is directly comparable with GCPercent.

//

// If AvailGCPercent >= GCPercent, the garbage collector is

// not under pressure and can amortize the cost of garbage

// collection by allowing the heap to grow in proportion to

// how much is used.

//

// If AvailGCPercent < GCPercent, the garbage collector is

// under pressure and must run more frequently to keep the

// heap size under MaxHeapBytes. Smaller values of

// AvailGCPercent indicate greater pressure. In this case, the

// application should shed load and reduce its live heap size

// to relieve memory pressure.

//

// AvailGCPercent is always >= 0.

AvailGCPercent int

}

// Only a soft limit on the Go heap; off by default

// SetMaxHeap sets a soft limit on the size of the Go heap and returns

// the previous setting. By default, there is no limit.

//

// If a max heap is set, the garbage collector will endeavor to keep

// the heap size under the specified size, even if this is lower than

// would normally be determined by GOGC (see SetGCPercent).

//

// When the garbage collector's policy changes because of SetMaxHeap (that is, the result returned by ReadGCPolicy changes),

// a notification is sent to notify

// Whenever the garbage collector's scheduling policy changes as a

// result of this heap limit (that is, the result that would be

// returned by ReadGCPolicy changes), the garbage collector will send

// to the notify channel. This is a non-blocking send, so this should

// be a single-element buffered channel, though this is not required.

// Only a single channel may be registered for notifications at a

// time; SetMaxHeap replaces any previously registered channel.

//

// The application is strongly encouraged to respond to this

// notification by calling ReadGCPolicy and, if AvailGCPercent is less

// than GCPercent, shedding load to reduce its live heap size. Setting

// a maximum heap size limits the garbage collector's ability to

// amortize the cost of garbage collection when the heap reaches the

// heap size limit. This is particularly important in

// request-processing systems, where increasing pressure on the

// garbage collector reduces CPU time available to the application,

// making it less able to complete work, leading to even more pressure

// on the garbage collector. The application must shed load to avoid

// this "GC death spiral".

//

// The limit set by SetMaxHeap is soft. If the garbage collector would

// consume too much CPU to keep the heap under this limit (leading to

// "thrashing"), it will allow the heap to grow larger than the

// specified max heap.

//

// The heap size does not cover everything in the process's memory footprint: it excludes stacks, C-allocated memory, and many runtime-internal structures.

// The heap size does not include everything in the process's memory

// footprint. Notably, it does not include stacks, C-allocated memory,

// or many runtime-internal structures.

//

// Passing bytes == ^uintptr(0) disables SetMaxHeap; only in that case may notify be nil

// To disable the heap limit, pass ^uintptr(0) for the bytes argument.

// In this case, notify can be nil.

//

// To rely only on SetMaxHeap to trigger GC, call SetGCPercent(-1) after setting the limit to turn off the regular GC trigger

// To depend only on the heap limit to trigger garbage collection,

// call SetGCPercent(-1) after setting a heap limit.

func SetMaxHeap(bytes uintptr, notify chan<- struct{}) uintptr {

// Disable the feature

if bytes == ^uintptr(0) {

return gcSetMaxHeap(bytes, nil)

}

// A notify channel must be supplied when enabling the feature

if notify == nil {

panic("SetMaxHeap requires a non-nil notify channel")

}

return gcSetMaxHeap(bytes, notify)

}

// gcSetMaxHeap is provided by package runtime.

// Only declared here; the implementation lives in package runtime

func gcSetMaxHeap(bytes uintptr, notify chan<- struct{}) uintptr

// ReadGCPolicy reads the garbage collector's current policy for

// managing the heap size. This includes static settings controlled by

// the application and dynamic policy determined by heap usage.

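// ReadGCPolicy reads the garbage collector's current policy for managing the heap size,

// which covers static settings controlled by the application and dynamic policy determined by heap usage; the exact return values are shown later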

func ReadGCPolicy(gcp *GCPolicy) {

gcp.GCPercent, gcp.MaxHeapBytes, gcp.AvailGCPercent = gcReadPolicy()

}

// gcReadPolicy is provided by package runtime.

// Only declared here; the implementation lives in package runtime

func gcReadPolicy() (gogc int, maxHeap uintptr, egogc int)
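The doc comments above recommend reacting to each notification by reading the policy and shedding load when AvailGCPercent is below GCPercent. Since SetMaxHeap is not in the standard library, the sketch below uses a local GCPolicy type that mirrors the CL's struct and takes the policy reader as a function argument; underPressure and watch are hypothetical helpers written for this example.

```go
package main

import "fmt"

// GCPolicy mirrors the fields of the CL's debug.GCPolicy so that the
// decision logic can be tried without a patched toolchain.
type GCPolicy struct {
	GCPercent      int
	MaxHeapBytes   uintptr
	AvailGCPercent int
}

// underPressure applies the rule from the doc comment: the collector is
// under pressure when AvailGCPercent is less than GCPercent.
func underPressure(p GCPolicy) bool {
	return p.AvailGCPercent < p.GCPercent
}

// watch reads the policy after every notification and calls shed when
// the collector is under pressure. It returns when notify is closed.
func watch(notify <-chan struct{}, read func() GCPolicy, shed func(GCPolicy)) {
	for range notify {
		if p := read(); underPressure(p) {
			shed(p)
		}
	}
}

func main() {
	// Two canned policy readings stand in for ReadGCPolicy.
	readings := []GCPolicy{
		{GCPercent: 100, MaxHeapBytes: 1 << 30, AvailGCPercent: 100},
		{GCPercent: 100, MaxHeapBytes: 1 << 30, AvailGCPercent: 20},
	}
	next := 0
	read := func() GCPolicy { p := readings[next]; next++; return p }

	notify := make(chan struct{}, 1)
	done := make(chan struct{})
	go func() {
		watch(notify, read, func(p GCPolicy) {
			fmt.Println("shedding load, AvailGCPercent =", p.AvailGCPercent)
		})
		close(done)
	}()
	notify <- struct{}{}
	notify <- struct{}{}
	close(notify)
	<-done
}
```

One caveat that follows from the comparison: with SetGCPercent(-1), GCPercent is -1 and AvailGCPercent is never negative, so this check never fires. A program using simple usage 1 would probably need its own threshold on AvailGCPercent.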

$GOROOT/src/runtime/mgc.go

// To begin with, maxHeap is infinity.

// Off by default: set to the maximum value

var maxHeap uintptr = ^uintptr(0)

func gcinit() {

if unsafe.Sizeof(workbuf{}) != _WorkbufSize {

throw("size of Workbuf is suboptimal")

}

// No sweep on the first cycle.

mheap_.sweepdone = 1

// Set a reasonable initial GC trigger.

memstats.triggerRatio = 7 / 8.0

// Fake a heap_marked value so it looks like a trigger at

// heapminimum is the appropriate growth from heap_marked.

// This will go into computing the initial GC goal.

memstats.heap_marked = uint64(float64(heapminimum) / (1 + memstats.triggerRatio))

// Disable heap limit initially.

// Off by default

gcPressure.maxHeap = ^uintptr(0)

// Set gcpercent from the environment. This will also compute

// and set the GC trigger and goal.

_ = setGCPercent(readgogc())

work.startSema = 1

work.markDoneSema = 1

}

//go:linkname setGCPercent runtime/debug.setGCPercent

func setGCPercent(in int32) (out int32) {

// Run on the system stack since we grab the heap lock.

var updated bool

systemstack(func() {

lock(&mheap_.lock)

out = gcpercent

if in < 0 {

in = -1

}

gcpercent = in

// For compatibility, goal is no longer forced to the maximum value when gcpercent < 0

if gcpercent >= 0 {

heapminimum = defaultHeapMinimum * uint64(gcpercent) / 100

} else {

heapminimum = 0

}

// Update pacing in response to gcpercent change.

updated = gcSetTriggerRatio(memstats.triggerRatio)

unlock(&mheap_.lock)

})

// Pacing changed, so the scavenger should be awoken.

wakeScavenger()

// If SetMaxHeap is enabled and the policy changed, send a notification to notify

if updated {

gcPolicyNotify()

}

// If we just disabled GC, wait for any concurrent GC mark to

// finish so we always return with no GC running.

if in < 0 {

gcWaitOnMark(atomic.Load(&work.cycles))

}

return out

}

// New internal state for SetMaxHeap

var gcPressure struct {

// lock may be acquired while mheap_.lock is held. Hence, it

// must only be acquired from the system stack.

lock mutex

// notify is a notification channel for GC pressure changes

// with a notification sent after every gcSetTriggerRatio.

// It is provided by package debug. It may be nil.

notify chan<- struct{}

// Together gogc, maxHeap, and egogc represent the GC policy.

//

// gogc is GOGC, maxHeap is the GC heap limit, and egogc is the effective GOGC.

// In other words, these three values together make up the GC policy

//

// These are set by the user with debug.SetMaxHeap. GC will

// attempt to keep heap_live under maxHeap, even if it has to

// violate GOGC (up to a point).

gogc int

maxHeap uintptr

egogc int

}

//go:linkname gcSetMaxHeap runtime/debug.gcSetMaxHeap

// Implements package debug's gcSetMaxHeap here via linkname

func gcSetMaxHeap(bytes uintptr, notify chan<- struct{}) uintptr {

var (

prev uintptr

updated bool

)

systemstack(func() {

// gcPressure.notify has a write barrier on it so it must be protected

// by gcPressure's lock instead of mheap's, otherwise we could deadlock.

// Take the lock, record the previous limit, and store bytes in maxHeap

lock(&gcPressure.lock)

gcPressure.notify = notify

unlock(&gcPressure.lock)

lock(&mheap_.lock)

// Update max heap.

prev = maxHeap

maxHeap = bytes

// Update pacing. This will update gcPressure from the

// globals gcpercent and maxHeap.

updated = gcSetTriggerRatio(memstats.triggerRatio)

unlock(&mheap_.lock)

})

if updated {

gcPolicyNotify()

}

// Return the previous limit

return prev

}

// gcSetTriggerRatio sets the trigger ratio and updates everything

// derived from it: the absolute trigger, the heap goal, mark pacing,

// and sweep pacing.

//

// This can be called any time. If GC is the in the middle of a

// concurrent phase, it will adjust the pacing of that phase.

//

// This depends on gcpercent, mheap_.maxHeap, memstats.heap_marked,

// and memstats.heap_live. These must be up to date.

//

// Returns whether or not there was a change in the GC policy.

// If it returns true, the caller must call gcPolicyNotify() after

// releasing the heap lock.

//

// mheap_.lock must be held or the world must be stopped.

//

// This must be called on the system stack because it acquires

// gcPressure.lock.

//

//go:systemstack

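// Added return value changed: when it is true, a notification is sent to notify

// This function is called from gcSetMaxHeap, setGCPercent, and gcMarkTermination

// gcSetMaxHeap and setGCPercent run only when called explicitly; gcMarkTermination runs during STW2 of every GC cycle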

func gcSetTriggerRatio(triggerRatio float64) (changed bool) {

// Since GOGC ratios are in terms of heap_marked, make sure it

// isn't 0. This shouldn't happen, but if it does we want to

// avoid infinities and divide-by-zeroes.

if memstats.heap_marked == 0 {

memstats.heap_marked = 1

}

// Compute the next GC goal, which is when the allocated heap

// has grown by GOGC/100 over the heap marked by the last

// cycle, or maxHeap, whichever is lower.

// First compute goal from gcpercent

goal := ^uint64(0)

if gcpercent >= 0 {

goal = memstats.heap_marked + memstats.heap_marked*uint64(gcpercent)/100

}

lock(&gcPressure.lock)

// If SetMaxHeap is enabled and goal exceeds the limit, override goal

if gcPressure.maxHeap != ^uintptr(0) && goal > uint64(gcPressure.maxHeap) { // Careful of 32-bit uintptr!

// Use maxHeap-based goal.

goal = uint64(gcPressure.maxHeap)

unlock(&gcPressure.lock)

// Avoid thrashing by not letting the

// effective GOGC drop below 10.

//

// TODO(austin): This heuristic is pulled from

// thin air. It might be better to do

// something to more directly force

// amortization of GC costs, e.g., by limiting

// what fraction of the time GC can be active.

var minGOGC uint64 = 10

if gcpercent >= 0 && uint64(gcpercent) < minGOGC {

// The user explicitly requested

// GOGC < minGOGC. Use that.

minGOGC = uint64(gcpercent)

}

lowerBound := memstats.heap_marked + memstats.heap_marked*minGOGC/100

if goal < lowerBound {

goal = lowerBound

}

} else {

unlock(&gcPressure.lock)

}

// Set the trigger ratio, capped to reasonable bounds.

if triggerRatio < 0 {

// This can happen if the mutator is allocating very

// quickly or the GC is scanning very slowly.

triggerRatio = 0

} else if gcpercent >= 0 && triggerRatio > float64(gcpercent)/100 {

// Cap trigger ratio at GOGC/100.

triggerRatio = float64(gcpercent) / 100

}

memstats.triggerRatio = triggerRatio

// Compute the absolute GC trigger from the trigger ratio.

//

// We trigger the next GC cycle when the allocated heap has

// grown by the trigger ratio over the marked heap size.

trigger := ^uint64(0)

// Compute gc_trigger

if goal != ^uint64(0) {

trigger = uint64(float64(memstats.heap_marked) * (1 + triggerRatio))

// Ensure there's always a little margin so that the

// mutator assist ratio isn't infinity.

if trigger > goal*95/100 {

trigger = goal * 95 / 100

}

// If we let triggerRatio go too low, then if the application

// is allocating very rapidly we might end up in a situation

// where we're allocating black during a nearly always-on GC.

// The result of this is a growing heap and ultimately an

// increase in RSS. By capping us at a point >0, we're essentially

// saying that we're OK using more CPU during the GC to prevent

// this growth in RSS.

//

// The current constant was chosen empirically: given a sufficiently

// fast/scalable allocator with 48 Ps that could drive the trigger ratio

// to <0.05, this constant causes applications to retain the same peak

// RSS compared to not having this allocator.

const minTriggerRatio = 0.6

minTrigger := memstats.heap_marked + uint64(minTriggerRatio*float64(goal-memstats.heap_marked))

if trigger < minTrigger {

trigger = minTrigger

}

// Don't trigger below the minimum heap size.

minTrigger = heapminimum

if !isSweepDone() {

// Concurrent sweep happens in the heap growth

// from heap_live to gc_trigger, so ensure

// that concurrent sweep has some heap growth

// in which to perform sweeping before we

// start the next GC cycle.

sweepMin := atomic.Load64(&memstats.heap_live) + sweepMinHeapDistance

if sweepMin > minTrigger {

minTrigger = sweepMin

}

}

if trigger < minTrigger {

trigger = minTrigger

}

if int64(trigger) < 0 {

print("runtime: next_gc=", memstats.next_gc, " heap_marked=", memstats.heap_marked, " heap_live=", memstats.heap_live, " initialHeapLive=", work.initialHeapLive, "triggerRatio=", triggerRatio, " minTrigger=", minTrigger, "\n")

throw("gc_trigger underflow")

}

if trigger > goal {

// The trigger ratio is always less than GOGC/100, but

// other bounds on the trigger may have raised it.

// Push up the goal, too.

goal = trigger

}

}

// Commit to the trigger and goal.

memstats.gc_trigger = trigger

memstats.next_gc = goal

if trace.enabled {

traceNextGC()

}

// Update mark pacing.

if gcphase != _GCoff {

gcController.revise()

}

// Update sweep pacing.

if isSweepDone() {

mheap_.sweepPagesPerByte = 0

} else {

// Concurrent sweep needs to sweep all of the in-use

// pages by the time the allocated heap reaches the GC

// trigger. Compute the ratio of in-use pages to sweep

// per byte allocated, accounting for the fact that

// some might already be swept.

heapLiveBasis := atomic.Load64(&memstats.heap_live)

heapDistance := int64(trigger) - int64(heapLiveBasis)

// Add a little margin so rounding errors and

// concurrent sweep are less likely to leave pages

// unswept when GC starts.

heapDistance -= 1024 * 1024

if heapDistance < _PageSize {

// Avoid setting the sweep ratio extremely high

heapDistance = _PageSize

}

pagesSwept := atomic.Load64(&mheap_.pagesSwept)

pagesInUse := atomic.Load64(&mheap_.pagesInUse)

sweepDistancePages := int64(pagesInUse) - int64(pagesSwept)

if sweepDistancePages <= 0 {

mheap_.sweepPagesPerByte = 0

} else {

mheap_.sweepPagesPerByte = float64(sweepDistancePages) / float64(heapDistance)

mheap_.sweepHeapLiveBasis = heapLiveBasis

// Write pagesSweptBasis last, since this

// signals concurrent sweeps to recompute

// their debt.

atomic.Store64(&mheap_.pagesSweptBasis, pagesSwept)

}

}

gcPaceScavenger()

// Update the GC policy due to a GC pressure change.

lock(&gcPressure.lock)

gogc, maxHeap, egogc := gcReadPolicyLocked()

// Return true if any of gogc, maxHeap, or egogc differs from its value at the previous gcSetTriggerRatio call

if gogc != gcPressure.gogc || maxHeap != gcPressure.maxHeap || egogc != gcPressure.egogc {

gcPressure.gogc, gcPressure.maxHeap, gcPressure.egogc = gogc, maxHeap, egogc

changed = true

}

unlock(&gcPressure.lock)

return

}

// Sends a non-blocking notification on gcPressure.notify.

//

// mheap_.lock and gcPressure.lock must not be held.

// After a few checks, send a non-blocking notification to notify

func gcPolicyNotify() {

// Switch to the system stack to acquire gcPressure.lock.

var n chan<- struct{}

gp := getg()

systemstack(func() {

lock(&gcPressure.lock)

if gcPressure.notify == nil {

unlock(&gcPressure.lock)

return

}

if raceenabled {

// notify is protected by gcPressure.lock, but

// the race detector can't see that.

raceacquireg(gp, unsafe.Pointer(&gcPressure.notify))

}

// Just grab the channel first so that we're holding as

// few locks as possible when we actually make the channel send.

n = gcPressure.notify

if raceenabled {

racereleaseg(gp, unsafe.Pointer(&gcPressure.notify))

}

unlock(&gcPressure.lock)

})

if n == nil {

return

}

// Perform a non-blocking send on the channel.

select {

case n <- struct{}{}:

default:

}

}

//go:linkname gcReadPolicy runtime/debug.gcReadPolicy

func gcReadPolicy() (gogc int, maxHeap uintptr, egogc int) {

systemstack(func() {

lock(&mheap_.lock)

gogc, maxHeap, egogc = gcReadPolicyLocked()

unlock(&mheap_.lock)

})

return

}

// mheap_.lock must be locked, therefore this must be called on the

// systemstack.

//go:systemstack

// As the earlier comment says: gogc is GOGC, maxHeap is the GC heap limit, and egogc is the effective GOGC

func gcReadPolicyLocked() (gogc int, maxHeapOut uintptr, egogc int) {

// Read the goal that was actually computed

goal := memstats.next_gc

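// If goal is below the limit and GC is not turned off, goal came from the normal gcpercent calculation with no intervention

// See simple usage 2, the GC trigger at time point 5: gcpercent is returned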

if goal < uint64(maxHeap) && gcpercent >= 0 {

// We're not up against the max heap size, so just

// return GOGC.

egogc = int(gcpercent)

} else {

// Back out the effective GOGC from the goal.

// Derive the gcpercent actually in effect

egogc = int(gcEffectiveGrowthRatio() * 100)

// The effective GOGC may actually be higher than

// gcpercent if the heap is tiny. Avoid that confusion

// and just return the user-set GOGC.

// When GC is on and the effective GOGC exceeds the configured gcpercent, still return the configured gcpercent

if gcpercent >= 0 && egogc > int(gcpercent) {

egogc = int(gcpercent)

}

}

// The three return values are the configured gcpercent, the configured maxHeap, and the gcpercent currently in effect

return int(gcpercent), maxHeap, egogc

}

func gcEffectiveGrowthRatio() float64 {

// Compute the effective gcpercent from this cycle's live heap and the next cycle's goal

// This inverts the formula `goal = memstats.heap_marked + memstats.heap_marked*uint64(gcpercent)/100`

egogc := float64(memstats.next_gc-memstats.heap_marked) / float64(memstats.heap_marked)

if egogc < 0 {

// Shouldn't happen, but just in case.

egogc = 0

}

return egogc

}

// test reports whether the trigger condition is satisfied, meaning

// that the exit condition for the _GCoff phase has been met. The exit

// condition should be tested when allocating.

// This function decides whether GC should be triggered; when it returns true, gcStart is called to run GC

func (t gcTrigger) test() bool {

if !memstats.enablegc || panicking != 0 || gcphase != _GCoff {

return false

}

switch t.kind {

case gcTriggerHeap:

// Non-atomic access to heap_live for performance. If

// we are going to trigger on this, this thread just

// atomically wrote heap_live anyway and we'll see our

// own write.

return memstats.heap_live >= memstats.gc_trigger

case gcTriggerTime:

if gcpercent < 0 && gcPressure.maxHeap == ^uintptr(0) {

return false

}

// With debug.SetMaxHeap set, GC is still triggered after more than 2 minutes without a GC, even under debug.SetGCPercent(-1)

lastgc := int64(atomic.Load64(&memstats.last_gc_nanotime))

return lastgc != 0 && t.now-lastgc > forcegcperiod

case gcTriggerCycle:

// t.n > work.cycles, but accounting for wraparound.

return int32(t.n-work.cycles) > 0

}

return true

}

func gcMarkTermination(nextTriggerRatio float64) {

……

……

// Update GC trigger and pacing for the next cycle.

var notify bool

systemstack(func() {

notify = gcSetTriggerRatio(nextTriggerRatio)

})

if notify {

gcPolicyNotify()

}

……

……

}
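The effective GOGC that gcReadPolicyLocked reports can be reproduced with plain arithmetic. The sketch below is a simplified model of that function and of gcEffectiveGrowthRatio as listed above; effectiveGOGC is an illustrative name, and heap sizes are passed in as arguments instead of being read from memstats.

```go
package main

import "fmt"

const GiB uint64 = 1 << 30

// effectiveGOGC backs the growth percentage out of the goal. If the
// goal is below the limit and GC is on, the configured gcpercent is in
// effect; otherwise the value is derived from goal and live heap and,
// when GC is on, capped at the configured gcpercent.
func effectiveGOGC(heapMarked, nextGC, maxHeap uint64, gcpercent int) int {
	if nextGC < maxHeap && gcpercent >= 0 {
		return gcpercent
	}
	var ratio float64
	// Guard against a goal below the live heap or an empty heap.
	if nextGC > heapMarked && heapMarked > 0 {
		ratio = float64(nextGC-heapMarked) / float64(heapMarked)
	}
	egogc := int(ratio * 100)
	if gcpercent >= 0 && egogc > gcpercent {
		egogc = gcpercent
	}
	return egogc
}

func main() {
	limit := 12 * GiB
	fmt.Println(effectiveGOGC(2*GiB, 4*GiB, limit, 100)) // goal below the limit
	fmt.Println(effectiveGOGC(8*GiB, limit, limit, 100)) // goal capped: only 50% growth left
	fmt.Println(effectiveGOGC(3*GiB, limit, limit, -1))  // GC off: growth up to the limit
}
```

As the live heap approaches the limit, the effective value shrinks, which is the signal surfaced to applications as AvailGCPercent.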

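A few things to note:

- It is a soft limit on the Go heap
- Once debug.SetMaxHeap is set, sysmon still triggers a GC after more than 2 minutes without one, even when GC is turned off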
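The second point follows from the time-based branch of gcTrigger.test in the listing above, which can be modelled as a small predicate. The function periodicGCDue and its nanosecond arguments are assumptions made for this sketch; the 2-minute period is the value the article describes.

```go
package main

import "fmt"

const (
	noLimit           = ^uint64(0)        // no max heap set
	forcePeriod int64 = 2 * 60 * 1e9      // 2 minutes, in nanoseconds
)

// periodicGCDue models the time-based trigger: it is switched off only
// when GC is off AND no max heap is set. Otherwise a GC is due once
// more than forcePeriod has passed since the last one.
func periodicGCDue(gcpercent int, maxHeap uint64, lastGC, now int64) bool {
	if gcpercent < 0 && maxHeap == noLimit {
		return false
	}
	return lastGC != 0 && now-lastGC > forcePeriod
}

func main() {
	last := int64(1)
	now := last + 3*60*1e9 // three minutes later
	fmt.Println(periodicGCDue(-1, noLimit, last, now)) // GC off, no limit
	fmt.Println(periodicGCDue(-1, 12<<30, last, now))  // GC off, limit set
	fmt.Println(periodicGCDue(100, noLimit, last, now)) // GC on
}
```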

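Note: I have tried to explain some Go mechanisms and the SetMaxHeap feature in plain language, so some descriptions may not match the implementation details exactly.

Please credit the source when reposting. Thank you.

This article was written by LeonardWang and is licensed under the Creative Commons Attribution 4.0 International License.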


Last edited: Jun 10, 2024